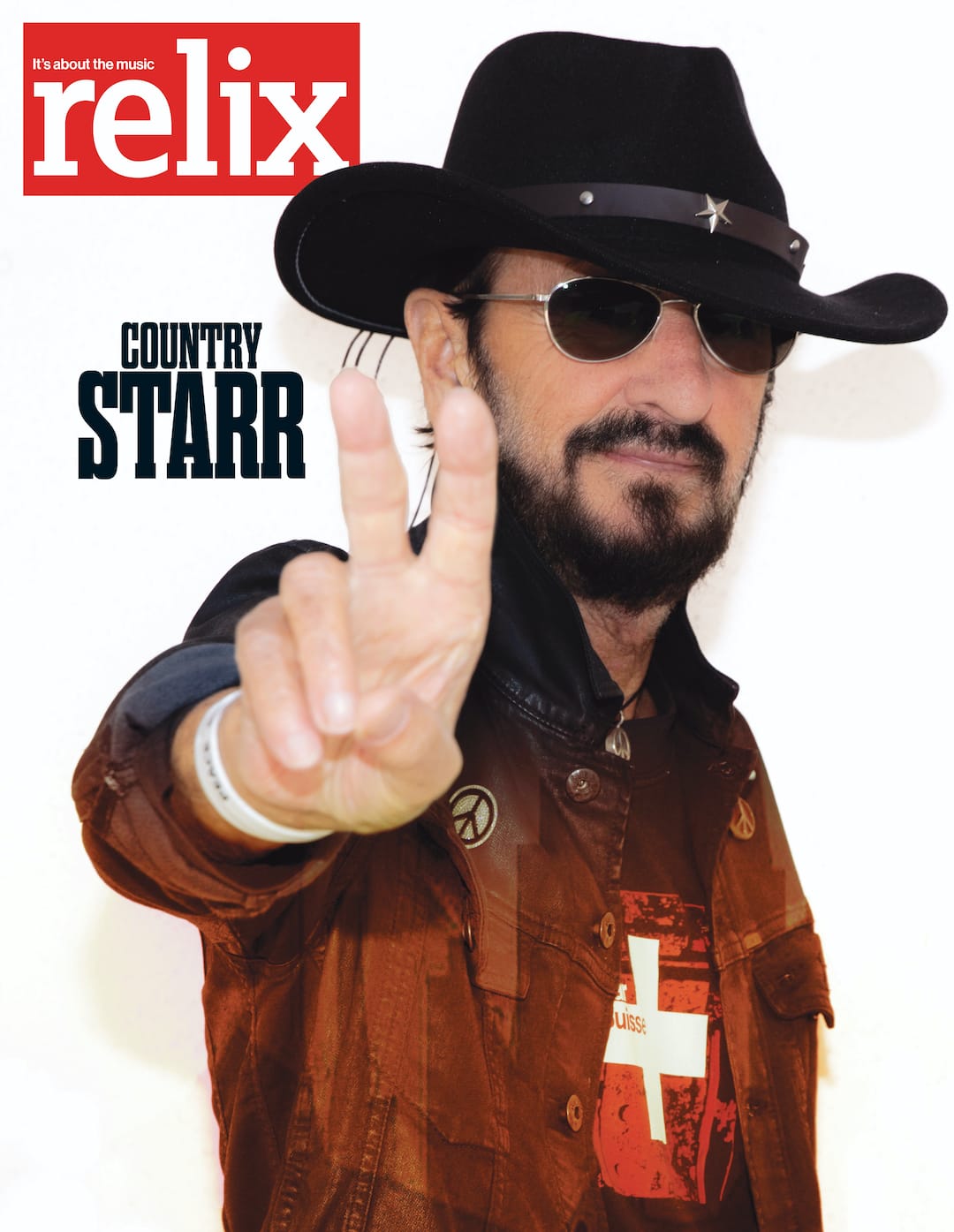

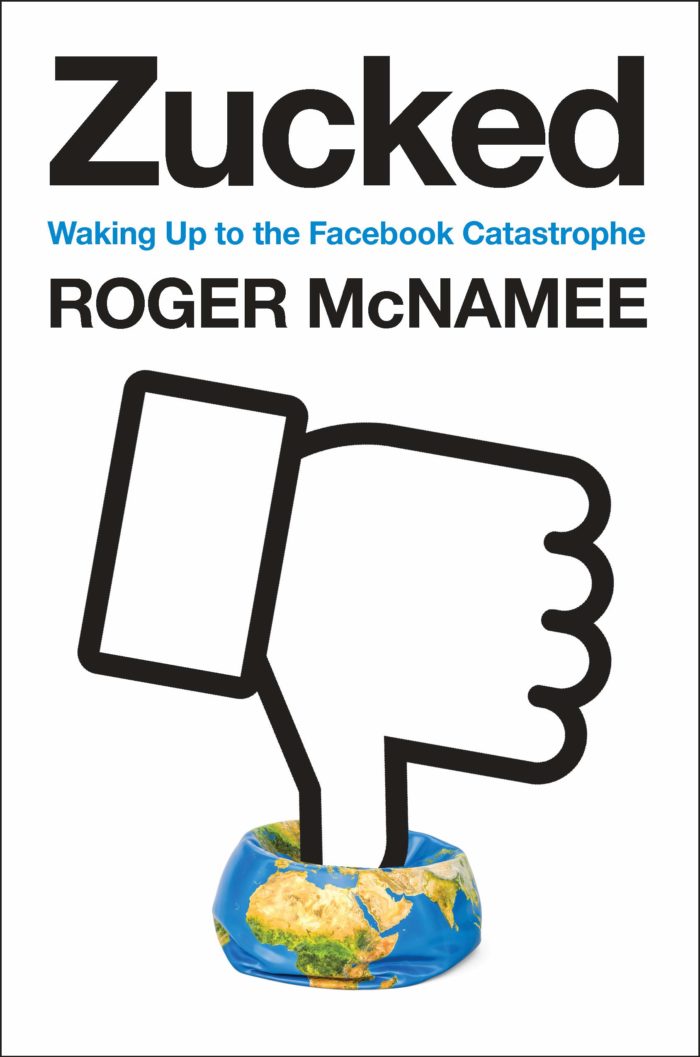

Interview: Moonalice’s Roger McNamee on New Book, ‘Zucked: Waking Up to the Facebook Catastrophe’

In his new book, Moonalice’s Roger McNamee—a longtime technology true believer—raises questions about the dark side of the Facebook business model, which has left the former adviser to the company feeling Zucked up.

In mid-2006, Facebook Chief Privacy Officer Chris Kelly invited venture capitalist Roger McNamee to meet with the then two-year-old company’s founder Mark Zuckerberg. Zuckerberg was only 22 years old, and Facebook accounts were still limited to undergrads, alumni with college email addresses and high-school students. At that time, McNamee advised Zuckerberg to retain ownership of Facebook, despite a billion-dollar offer from Yahoo. Over the ensuing three years, McNamee continued to counsel the CEO. As he writes in his new book Zucked: Waking Up to the Facebook Catastrophe, “I liked Zuck. I liked his team. I was a fan of Facebook… Mentoring is fun for me, and Zuck could not have been a better mentee.”

During this era, McNamee even recommended Sheryl Sandberg for the position of Facebook’s chief operating officer. McNamee, who may be most familiar to Relix readers through his role as the frontman of Moonalice and Doobie Decibel System, had first encountered Sandberg via a music project. In 1999, he worked with Grateful Dead Productions to develop the online merchandise platform he dubbed Bandwagon. U2 expressed interest in it and the group reached out to McNamee via Bono’s friend Sandberg, who was then chief of staff to Treasury Secretary Larry Summers and had heard of McNamee through financial circles. McNamee later met Bono and The Edge the day after they earned a Grammy for “Beautiful Day,” and in Zucked, he reveals, “I could not have named a U2 song, but I was blown away by the intelligence and business sophistication of the two Irishmen.” Although the expansive plans for Bandwagon were never fully realized, Bono eventually joined McNamee as a co-founder of Elevation Partners, which acquired Facebook stock in late 2009, less than three years before the company’s IPO, thanks to McNamee’s sessions with Zuckerberg. (McNamee, Bono and Elevation Partners co-founder Marc Bodnick also made personal investments in 2007.)

McNamee remained a Facebook champion long after he ceased working with Zuckerberg, but he hadn’t spoken with the CEO for seven years when a series of events prompted him to send an email to Zuckerberg and Sandberg 10 days prior to the 2016 election. He had come to suspect that “bad actors were exploiting Facebook’s architectural and business model to inflict harm on innocent people.” The two Facebook execs responded to him but dismissed his concerns, suggesting that the company had already identified and mitigated any potential harm. As McNamee chronicles in Zucked, he would discover that the peril was and is far more profound than he had initially suspected. He contends that this business model threatens not only its users’ mental health, but also the nation’s democratic ideals. “Facebook is the fourth most-valuable company in America,” he writes, “despite being only 15 years old, and its value stems from its mastery of surveillance and modification.”

Zucked is an absorbing read that not only offers a diagnosis but also shares correctives, both for individuals and the government.

You’ve always been such a positive spirit and a tech optimist. Has the journey that you describe in your book altered that perspective?

It was a huge shock being confronted with the architectural flaws of Google and Facebook, two companies I have known since their early days. To see that there was something really wrong there was 180 degrees from what I always believed about them. But the thing to take away from this is that I actually still have the same positive, optimistic attitude I’ve always had because I can see where we should go.

It’s simple how we get there. I believe users have far more power to solve this problem than they realize. And all that’s missing is somebody to help them see that power so they know how to exercise it. But the basic way to look at it is: Politicians know they need to do something about this. However, what they need is for consumers/users to say to them: “This is important; we believe in it.” So we all, as individuals, have political power.

But the second far more important thing is that Facebook and Google depend on our attention for their business model. Without our attention, they have nothing. And so, if we just change our behavior, even a little bit, it will have an impact. Facebook and Google are important; they do good things, and we love them most of the time. But the trick is to accept that a product that you love individually can be really dangerous for humanity.

So my observation is this: If I told you that by making small changes in your behavior, you could contribute to restoring democracy, and protecting your own mental health and that of your children—you could regain the ability to make choices without fear, have real privacy and also contribute to rebuilding our entrepreneurial economy—would you give up some of the convenience of products made by Facebook and Google to do that?

Again, I’m not saying you have to abandon them, but I’m just saying: Change your usage. Would you make the moves that give you the power? Which, very simply put, would be no longer letting them press your emotional buttons, particularly those related to fear and outrage—that you not engage in politics on these platforms, that you not get your news from these platforms, that you use these platforms for what they were originally intended for, which is to stay in touch with family and friends and organize events. If everyone just did a little bit of that, this would go very quickly. And that is what I’m trying to do.

Another important concern in tech is that we have allowed monopolies to develop—I would argue that Facebook, Google and Amazon are all monopolies—and among many things, these monopolies have put a damper on innovation. We’re running an experiment of depending on monopolies for every new technology that would drive our economy. There aren’t any examples in history of that working, so I recommend we stop doing that.

You mention giving up convenience. I wonder how challenging that will be, given the pace of society and the expectations of longtime users.

I have a hypothesis that I’d like to try out on you. I wonder if it’s possible that there is actually some human evolutionary driver that makes us choose convenience even when we know the consequences might be bad for us. I need to ask an evolutionary biologist because I think everyone chooses convenience, not just Americans. Again, I’m not saying to get rid of convenience; I’m just suggesting that we give up some of it.

I’ve been running this experiment for a while now. Back in the day, I used to carry seven mobile devices. I was a true believer in technology; I trusted it completely. For the last year and a half, I’ve been changing my behavior just to see if I can do it.

It’s been really interesting because I still use Facebook, but I use it really differently than I did before. I used to do a lot of politics on Facebook and I used to let people push my emotional buttons. I don’t do any of those things anymore. Sometimes I have to bite my tongue, but that’s what I do.

I also have stopped using Google products entirely. For me, Google is like the river in the game Frogger, and the alternative products are the logs that go by. I have to hop from log to log to get across. I treat it like a video game. If I inadvertently press on Google access in some app that I’m using, I penalize myself. I go back a level, and start again. You know how they have those signs—“How many days since the last accident?” Well, I have: “How many days have I gone since my last inadvertent touch with a Google app.” Right now, I’m back in single digits. I had gone almost three months, and then annoyingly hit a Google map in some restaurant app.

Even if there was no malicious intent, the point at which Facebook authorized an academic study to alter users’ feeds in order to gauge the impact on their emotions feels like crossing a line.

But, keep in mind, what I’m saying here is that when they did that, they were acting as engineers. Somebody said, “We should just test this to see what happens.” They didn’t think of it as controversial. I’m not saying it’s good. What I’m saying is it’s understandable. The problem here is when all the people in the company have the same education, the same value systems and the same experiences, you don’t have the intellectual rigor that would protect you in a situation like that.

I could imagine that when Stephen Bannon came to Facebook with Cambridge Analytica, he said, “We can revolutionize election advertising on Facebook.” The idea was so clever—I mean incredibly cynical, terrifyingly clever—that I’m sure the engineers at Facebook were like, “Wow, he really does have a better idea. We’re engineers; let’s figure out if it works.” And I bet it would have never occurred to them how it would affect the outcome. I bet it never occurred to them to think that there might be a moral or legal problem there. You can say, “Well, that makes them bad people,” but I would argue that the flaw was in not having anyone in the company whose experience was different enough to be able to see that there was another side to that issue.

But again, that does not make them evil. That just means they were the wrong people to be running this company. You can accuse me of being charitable to these people, but remember that I knew them all, and they were all good people when I knew them. I’m not saying they’re not capable of doing bad things—they obviously have done a ton of bad things—but I don’t think they did bad things to be bad. I think they did bad things because they didn’t know any better.

I look at what happened in 2016 as a situation in which they didn’t have the wisdom to appreciate that all these experiments they were running could go horribly wrong. I think, to some degree, they were surprised by what happened and it took them a while to do the investigation internally. But my real objection—the part that really makes me angry—is that, at some point in 2017, they knew for sure that they had affected the outcome of the 2016 election, and were perhaps decisive in the outcome of the election.

At some point in 2017, they knew that their data-sharing policies had really harmed users. At some point in 2017, they had to understand that their persuasive technologies had been used to manipulate what people believed through filter bubbles. And at some point, they had to understand that their practices were limiting competitors—there weren’t any competitors out there—so they were hurting innovation. It’s when they knew all those things—again I would interpret that as around 2017, from that point forward—they had absolute consciousness of the flaws, and therefore their behavior is inexcusable.

Moonalice has been active over the years in connecting with fans through social media. Have your recent experiences led you to change that approach?

Ten years ago, Moonalice made the decision that we were going to have all our informational relationships with our fans on Facebook. And we were going to use our website as an archive of our live shows. That was unbelievably successful for us. We haven’t changed that because our fans still like to use it, and nobody gets hurt from that. That’s the good part of Facebook. This is a core point I’m trying to make here: I’m not angry at Facebook because I think they’re bad people. I’m saying the business model has unintended consequences for society in terms of the impact on public health, on democracy, on privacy and on innovation that are unacceptable.

I don’t want to kill Facebook, I just want to save democracy, public health, privacy and innovation. What I hope is that the folks at Facebook will get a good night’s sleep, and wake up one morning like, “What the hell are we doing? Let’s fix it.” I mean, they can’t fix democracy—some of these problems have gotten so big that there isn’t a technology solution.

For me, in terms of promoting the book, I’m promoting it on Facebook, Instagram, Google and Amazon. Why am I doing that? One, it’s where the audience is. But two, those are also the people who most need to hear my message. To me, that’s totally logical and I don’t see any inconsistencies there. I know people say, “That’s so weird you’re promoting on Facebook.” Then I go, “But keep in mind, these guys did not set out to hurt anybody.” They just got so fixated on connecting everybody in the world. They were convinced—in Google’s case, that collecting all the world’s information, and in Facebook’s case, connecting the whole world—that those goals were so important that they should find any means to get there. And that’s where the problem came in. It took a long time before the problems became obvious. And by the time they became obvious, the companies had completely lost their ability to be objective about their business model and business practices.

The thing that’s really obvious is that Facebook, Instagram, WhatsApp, Google, YouTube and Twitter are so important to our culture, to our politics, to music, to everything, that the best thing we can do is to find a way to get rid of all the harmful parts while keeping all the good parts. That’s my goal.

At the end of the book, you write, “Bringing people together in the real world is the perfect remedy for addiction to internet platforms. If we can do that, the world will be a better place.” One place where that happens is the live performance setting.

I believe strongly that live music is the perfect example of what we need to do to repair our broken culture in America. We need to find things that we share. We need to find our common humanity but also the fact that we have all of these interests in common.

I think we have a ton in common but that recognizes that every human being on earth has a right to self-determination. And the great thing about concerts is anybody can go and it’s a place where you don’t know the politics of the person next to you—you don’t know where they went to school, you don’t know how much money they earn, which is the way it should be. What you understand is you love the music of that artist.

In my mind, we need to do that everywhere. In schools, we need to teach that hate has no place in America and that discrimination has no place and that cruelty has no place. We already know that’s true at concerts. We need to take the live-music ethos and spread it all around our culture and all around our economy.

This is, in many ways, the centerpiece of my philosophy on this: If I weren’t a musician, if I didn’t get to see the bands I love, there would be almost no place in my life where I got to see the world I want to live in.

This article originally appeared in the March 2019 issue of Relix.